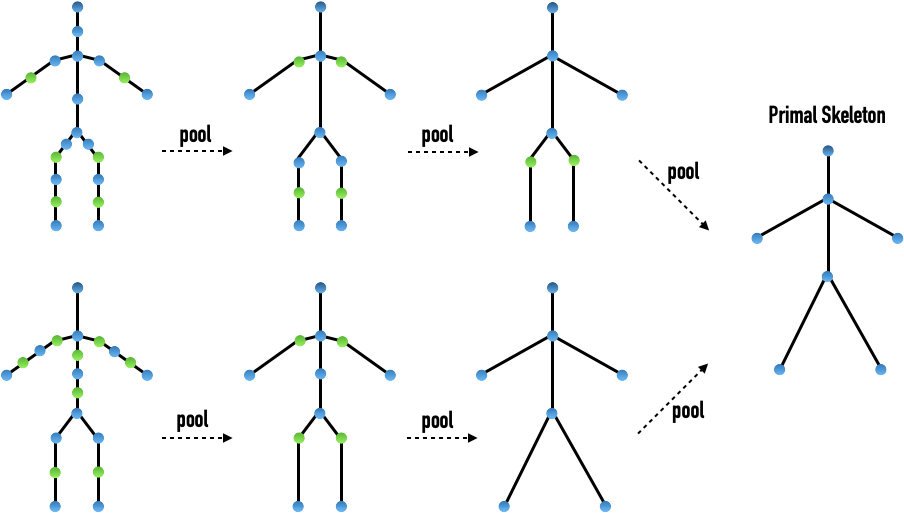

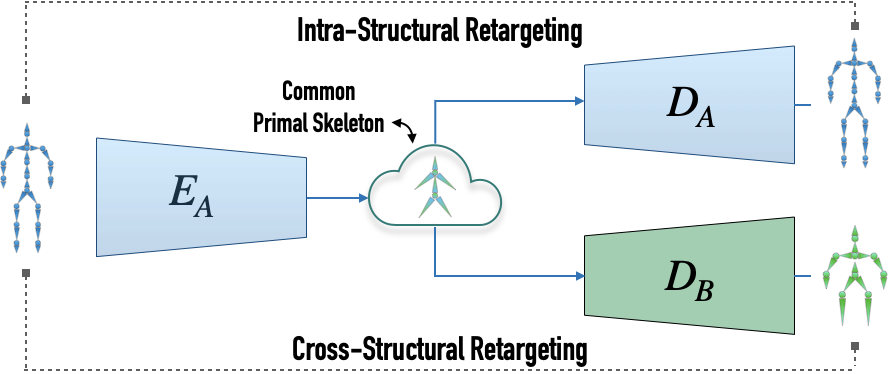

We introduce a novel deep learning framework for data-driven motion retargeting between skeletons that may differ in structure yet correspond to homeomorphic graphs. Importantly, our approach learns how to retarget without requiring any explicit pairing between the motions in the training set. We leverage the fact that different homeomorphic skeletons may be reduced to a common primal skeleton by a sequence of edge merging operations, which we refer to as skeletal pooling.
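The reduction to a primal skeleton can be illustrated with a small sketch. It assumes a skeleton is stored as a parent-index list (a hypothetical representation chosen for this example) and collapses each run of single-child joints in one step, whereas the pooling described above is a sequence of edge merges. The two example skeletons are made up for illustration.

```python
from collections import defaultdict


def primal_skeleton(parents):
    """Merge every run of single-child joints into one chain.

    parents[i] is the parent of joint i, or -1 for the root.
    Each returned chain lists the joints from one kept joint (root,
    branch point, or end effector) to the next kept joint.
    """
    children = defaultdict(list)
    for joint, parent in enumerate(parents):
        if parent >= 0:
            children[parent].append(joint)

    def is_kept(joint):
        # Interior joints with exactly one child are merged away.
        return parents[joint] < 0 or len(children[joint]) != 1

    chains = []
    for start in range(len(parents)):
        if is_kept(start):
            for child in children[start]:
                chain = [start, child]
                while not is_kept(chain[-1]):
                    chain.append(children[chain[-1]][0])
                chains.append(chain)
    return chains


# Two hypothetical skeletons: a root with two legs and a spine.
# Skeleton B has extra joints along the left leg and the spine.
parents_a = [-1, 0, 1, 2, 0, 4, 5, 0, 7]
parents_b = [-1, 0, 1, 2, 3, 0, 5, 6, 0, 8, 9]

chains_a = primal_skeleton(parents_a)
chains_b = primal_skeleton(parents_b)

# Both skeletons reduce to three primal edges leaving the root.
print(len(chains_a), len(chains_b))
```

Although the two skeletons have different joint counts, each chain in one corresponds to a chain in the other, which is the property that lets them share a primal skeleton.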

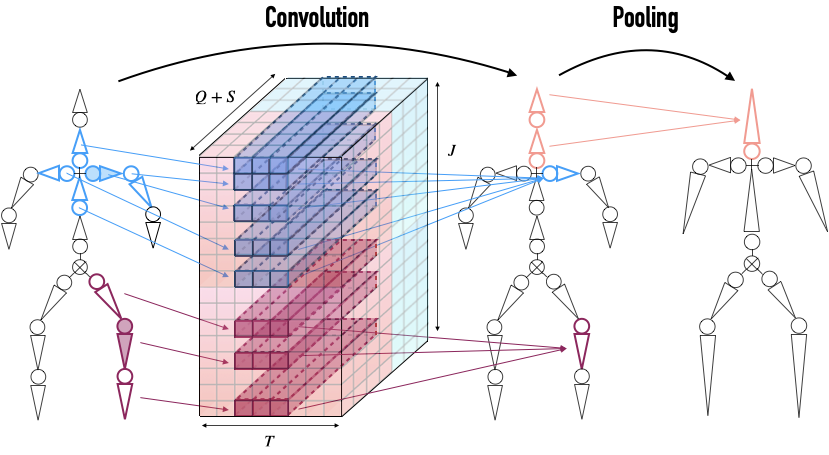

Our main technical contribution is the introduction of novel differentiable convolution, pooling, and unpooling operators, which are skeleton-aware, meaning that they explicitly account for the skeleton's hierarchical structure and joint adjacency.
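As a rough illustration of what "skeleton-aware" can mean for a convolution, the sketch below restricts each edge's output to a weighted sum over edges that lie within a given distance in the skeleton graph. The parent-list representation, the function names `edge_neighbors` and `skeleton_conv`, the scalar per-edge features, and the single-frame setting are all assumptions made for this example, not the operators' actual definition.

```python
def edge_neighbors(parents, distance=1):
    """Map each edge to the sorted list of edges within `distance` hops.

    An edge is identified by its child joint; two edges are adjacent
    when they share a joint. Every edge is its own neighbor.
    """
    edges = [j for j, p in enumerate(parents) if p >= 0]
    adjacent = {
        a: [b for b in edges if b != a and {a, parents[a]} & {b, parents[b]}]
        for a in edges
    }
    neighbors = {}
    for edge in edges:
        seen = {edge}
        frontier = [edge]
        for _ in range(distance):
            frontier = [b for a in frontier for b in adjacent[a] if b not in seen]
            seen.update(frontier)
        neighbors[edge] = sorted(seen)
    return neighbors


def skeleton_conv(features, neighbors, weights):
    """Weighted sum of each edge's neighborhood (one scalar per edge)."""
    return {
        edge: sum(weights[edge][n] * features[n] for n in nbrs)
        for edge, nbrs in neighbors.items()
    }


# Hypothetical skeleton: a root with two three-edge legs and a two-edge spine.
parents = [-1, 0, 1, 2, 0, 4, 5, 0, 7]
neighbors = edge_neighbors(parents, distance=1)

# Placeholder weights and features; in a trained network the weights are learned.
features = {edge: 1.0 for edge in neighbors}
weights = {edge: {n: 1.0 for n in nbrs} for edge, nbrs in neighbors.items()}
output = skeleton_conv(features, neighbors, weights)
```

With unit weights and features, each output simply counts the edges in the neighborhood, which makes the effect of joint adjacency easy to inspect.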

Our operators form the building blocks of a new deep motion processing framework that embeds the motion into a common latent space, shared by a collection of homeomorphic skeletons. Thus, retargeting can be achieved simply by encoding to, and decoding from, this latent space.
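The encode-then-decode data flow can be sketched with a toy stand-in. Here the learned encoder is replaced by averaging per-edge values along each chain, and the learned decoder by copying each latent value back to every edge of the target chain. The chain lists, the function names, and the single scalar per edge are assumptions made for illustration; no trained network is involved.

```python
# Edges (identified by child joint) grouped by primal edge, for two
# hypothetical skeletons that share a three-edge primal skeleton.
CHAINS_A = [[1, 2, 3], [4, 5, 6], [7, 8]]
CHAINS_B = [[1, 2, 3, 4], [5, 6, 7], [8, 9, 10]]


def encode(edge_values, chains):
    """Toy encoder: average the values of the edges in each chain."""
    return [sum(edge_values[e] for e in chain) / len(chain) for chain in chains]


def decode(latent, chains):
    """Toy decoder: copy each latent value to every edge of its chain."""
    return {e: z for z, chain in zip(latent, chains) for e in chain}


def retarget(edge_values, source_chains, target_chains):
    """Encode with the source skeleton, decode with the target skeleton."""
    return decode(encode(edge_values, source_chains), target_chains)


# One made-up scalar per edge of skeleton A for a single frame.
motion_a = {1: 0.0, 2: 3.0, 3: 6.0, 4: 1.0, 5: 1.0, 6: 1.0, 7: 2.0, 8: 4.0}

latent = encode(motion_a, CHAINS_A)
motion_b = retarget(motion_a, CHAINS_A, CHAINS_B)
```

The latent vector has one entry per primal edge regardless of the source skeleton, so the same `decode` call works for any target whose chains map onto the same primal skeleton.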

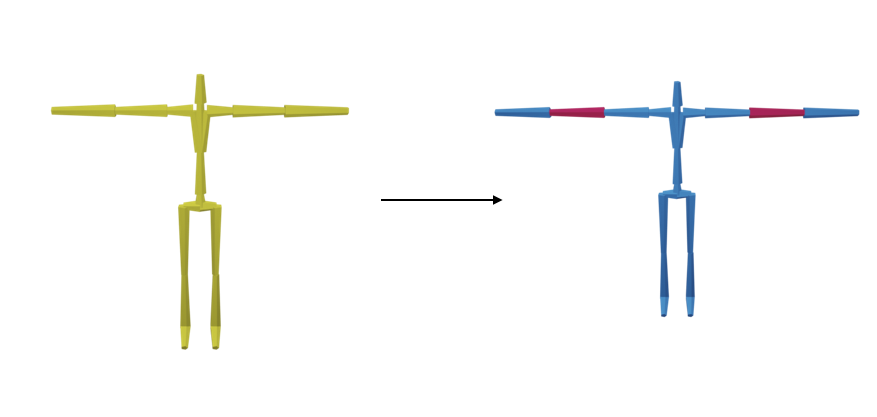

Our method can retarget motion to asymmetric characters or characters with missing/extra bones:

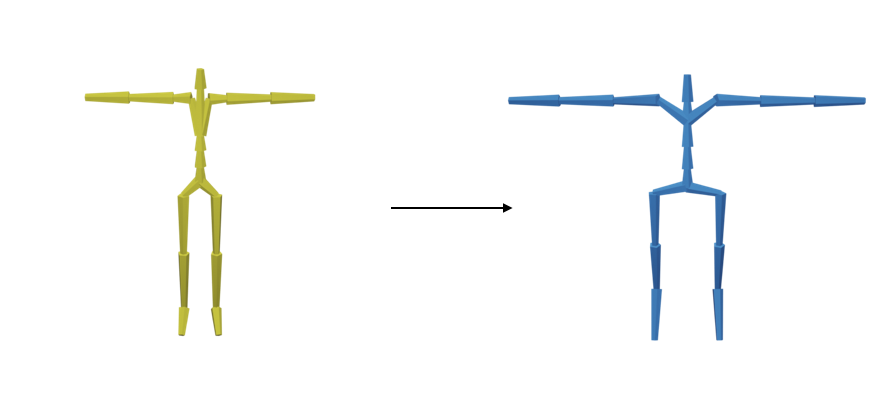

Our framework can also be used for retargeting between skeletons with the same structure but different proportions. Here our method is compared to a naive adaptation of CycleGAN [Zhu et al. 2017] to the motion domain and to NKN of Villegas et al. [2018]. The outputs are overlaid with the ground truth (green skeleton):

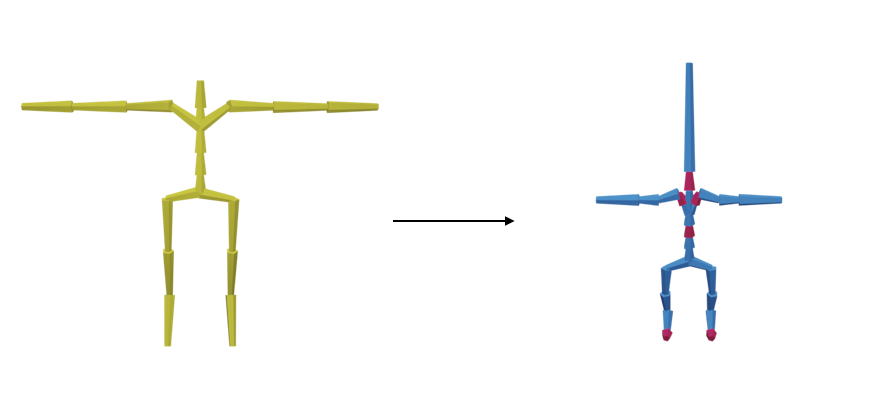

For cross-structural retargeting, our method is compared to a naive adaptation of CycleGAN [Zhu et al. 2017] to the motion domain and to a cross-structural version of NKN of Villegas et al. [2018]. The outputs are overlaid with the ground truth (green skeleton):

@article{aberman2020skeleton,
  author = {Aberman, Kfir and Li, Peizhuo and Lischinski, Dani and Sorkine-Hornung, Olga and Cohen-Or, Daniel and Chen, Baoquan},
  title = {Skeleton-Aware Networks for Deep Motion Retargeting},
  journal = {ACM Transactions on Graphics (TOG)},
  volume = {39},
  number = {4},
  pages = {62},
  year = {2020},
  publisher = {ACM}
}