We present a novel data-driven framework for motion style transfer, which learns from an unpaired collection of motions with style labels and can transfer motion styles not observed during training. Furthermore, our framework can extract motion styles directly from videos, bypassing 3D reconstruction, which extends the set of style examples far beyond motions captured by MoCap systems.

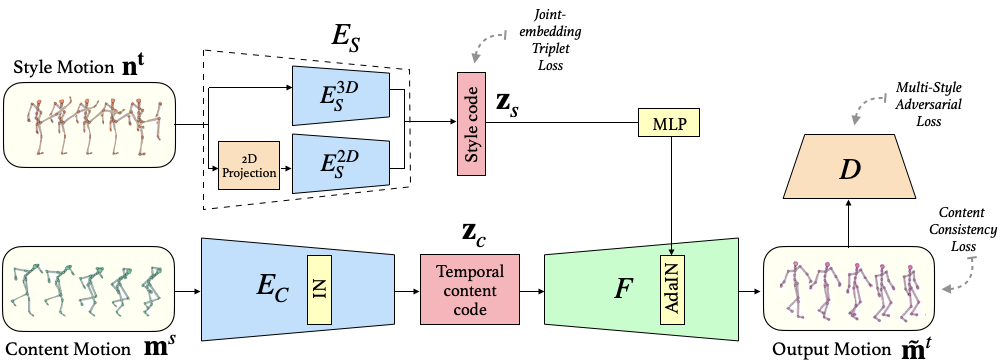

Our style transfer network encodes motions into two latent codes, for content and for style, each of which plays a different role in the decoding (synthesis) process. While the content code is decoded into the output motion by several temporal convolutional layers, the style code modifies deep features via temporally invariant adaptive instance normalization (AdaIN).
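To make the role of the style code concrete, here is a minimal NumPy sketch of temporally invariant AdaIN. It assumes a feature map of shape `(channels, frames)` and per-channel scale and shift vectors `gamma` and `beta` that have already been derived from the style code. How the network computes them is not covered here, and the function name `temporal_adain` is illustrative rather than taken from the authors' code.

```python
import numpy as np


def temporal_adain(features, gamma, beta, eps=1e-5):
    """Apply AdaIN with statistics computed over the temporal axis.

    features: array of shape (channels, frames), deep features of the content path.
    gamma, beta: arrays of shape (channels,), assumed to come from the style code.
    """
    # Per-channel statistics over time (instance normalization).
    mean = features.mean(axis=1, keepdims=True)
    std = np.sqrt(features.var(axis=1, keepdims=True) + eps)
    normalized = (features - mean) / std
    # The same scale and shift are applied at every frame, so the
    # style modulation itself does not vary over time.
    return gamma[:, None] * normalized + beta[:, None]


# Toy example with random features and hand-picked style parameters.
rng = np.random.default_rng(0)
features = rng.normal(size=(4, 32))
gamma = np.array([1.0, 2.0, 0.5, 1.5])
beta = np.array([0.0, -1.0, 3.0, 0.25])
stylized = temporal_adain(features, gamma, beta)
```

After the operation, each channel's temporal mean and standard deviation match `beta` and `gamma` (up to the small `eps` term), while the normalized temporal pattern of the content features is preserved.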

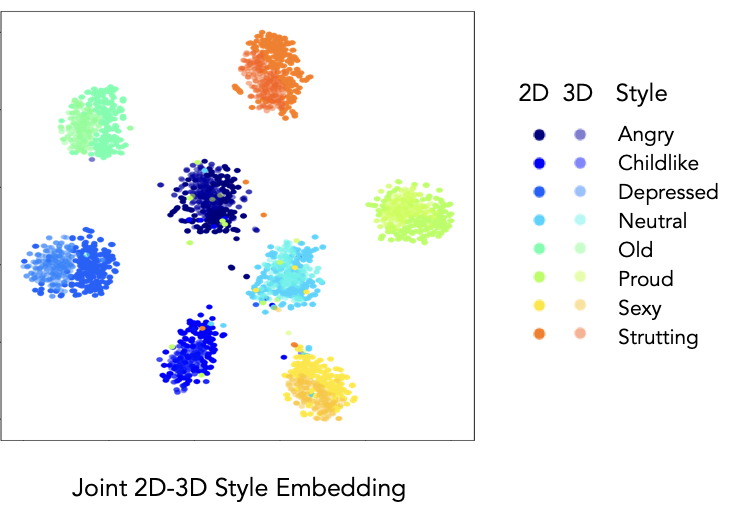

While the content code is encoded from 3D joint rotations, we learn a common embedding for style from either 3D or 2D joint positions, enabling style extraction from videos.

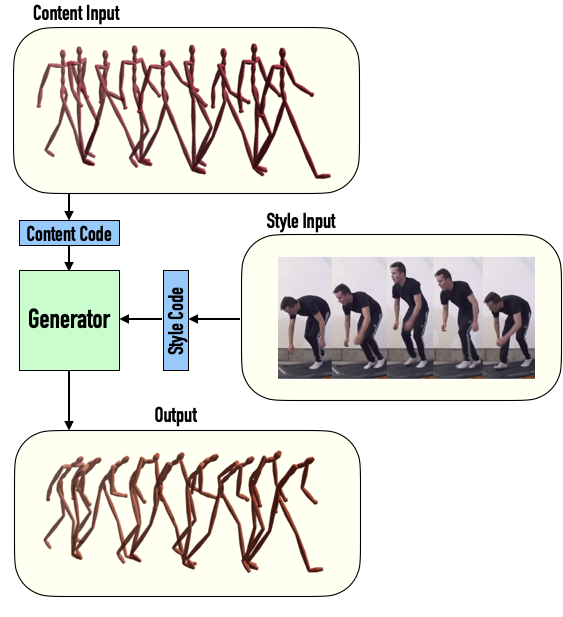

Given content and style input motions, our network can synthesize novel motions that exhibit the content of one input and the style of the other.

Our network can infer style from 2D key-points extracted from video and apply it to 3D animations.

Qualitative comparison of our method to the approach of Holden et al. [2016].

Our learned continuous style code space can be used to interpolate between styles. Style interpolation is achieved by linearly interpolating between two style codes and then passing the results through our decoder.
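The interpolation step can be sketched as follows, assuming style codes are plain vectors. The function name `interpolate_style_codes` and the code dimension are hypothetical, and the decoding of each blended code together with a content code is left out.

```python
import numpy as np


def interpolate_style_codes(code_a, code_b, num_steps):
    """Return num_steps style codes blended linearly from code_a to code_b."""
    weights = np.linspace(0.0, 1.0, num_steps)
    return np.stack([(1.0 - w) * code_a + w * code_b for w in weights])


# Two hypothetical style codes (the real code dimension is not specified here).
style_a = np.array([0.0, 1.0, -2.0])
style_b = np.array([4.0, 1.0, 2.0])
blended = interpolate_style_codes(style_a, style_b, num_steps=5)
# Each row of `blended` would then be decoded with the same content code.
```

The first and last rows reproduce the two input styles, and the rows in between correspond to gradual transitions from one style to the other.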

@article{aberman2020unpaired,
  author = {Aberman, Kfir and Weng, Yijia and Lischinski, Dani and Cohen-Or, Daniel and Chen, Baoquan},
  title = {Unpaired Motion Style Transfer from Video to Animation},
  journal = {ACM Transactions on Graphics (TOG)},
  volume = {39},
  number = {4},
  pages = {64},
  year = {2020},
  publisher = {ACM}
}